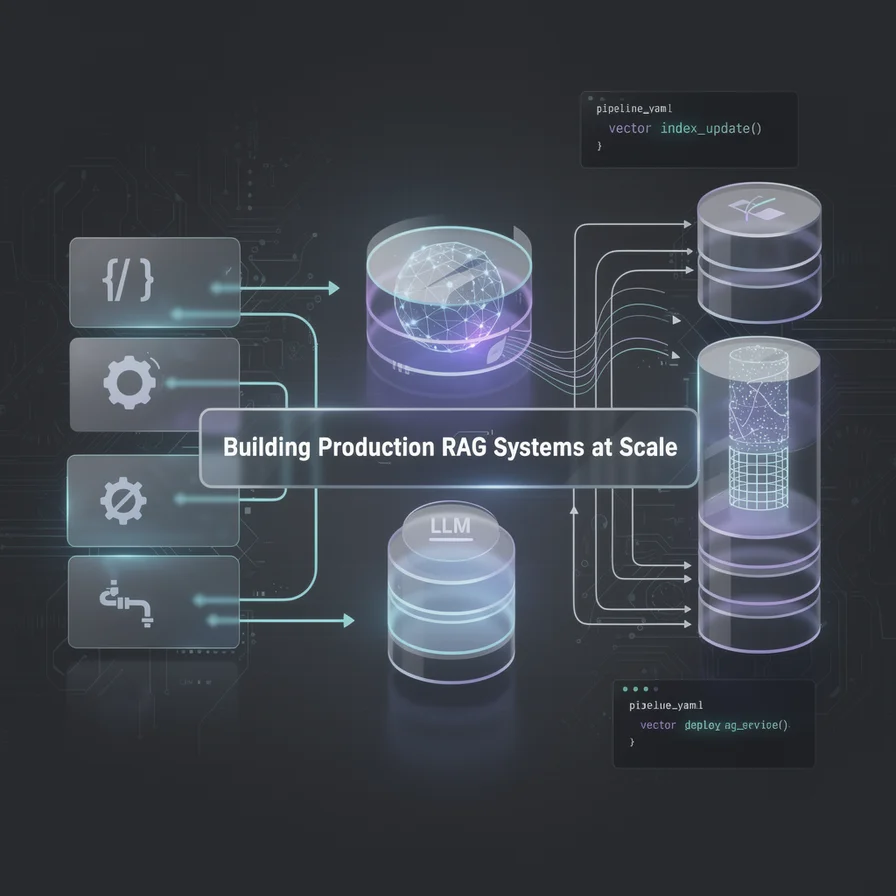

Building Production RAG Systems at Scale

A comprehensive guide to architecting production-ready RAG systems, covering vector database selection, chunking strategies, embedding pipelines, and LLM orchestration at scale.

I’ve built more RAG (Retrieval-Augmented Generation) systems in the past 18 months than I care to count. What started as a few prototype chatbots quickly evolved into production systems serving millions of queries, powering customer support, internal knowledge bases, and AI-powered documentation search across multiple organizations.

The gap between “RAG demo that works on my laptop” and “RAG system that serves 10,000 concurrent users with sub-second latency” is enormous. Most tutorials stop at the prototype phase—they show you how to embed some documents and query them with LangChain. What they don’t show you is what happens when your knowledge base grows to 10 million chunks, when your embedding costs hit $5,000/month, or when users start asking questions your retrieval system can’t handle.

This post is the guide I wish I had when I started building production RAG systems. I’ll cover the architecture decisions, performance optimizations, and hard-learned lessons from running RAG at scale.

The RAG Architecture Nobody Talks About

Most RAG tutorials show you this simple flow:

```text
User Query → Embed Query → Search Vector DB → Retrieve Docs → Send to LLM → Response
```

This works great for demos. In production, your architecture looks more like this:

```text
User Query
→ Query Analysis & Intent Detection
→ Multi-stage Retrieval (Hybrid Search)
→ Reranking & Relevance Scoring
→ Context Compression
→ LLM Generation with Streaming
→ Response Validation & Citation
→ Monitoring & Feedback Loop
```

Let me break down each stage and why it matters.
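Before going stage by stage, here is the overall shape as code. Each stage is an async function that reads and extends one shared state dict, and the orchestrator records which stages ran. This is a minimal illustrative sketch: `run_pipeline`, the state keys, and the four stand-in stages are hypothetical. Compression, validation, and monitoring are left out for brevity.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, List

State = Dict[str, Any]
Stage = Callable[[State], Awaitable[State]]


async def run_pipeline(query: str, stages: List[Stage]) -> State:
    """Run each stage in order, threading one shared state dict through them."""
    state: State = {"query": query, "trace": []}
    for stage in stages:
        state = await stage(state)
        state["trace"].append(stage.__name__)  # record which stages ran, for monitoring
    return state


# Hypothetical stand-in stages; real ones would call the classifier,
# hybrid retriever, reranker, and LLM described in the stages below.
async def analyze_query(state: State) -> State:
    return {**state, "intent": "factual"}


async def retrieve(state: State) -> State:
    return {**state, "candidates": ["chunk-a", "chunk-b", "chunk-c"]}


async def rerank(state: State) -> State:
    return {**state, "context": state["candidates"][:2]}


async def generate(state: State) -> State:
    return {**state, "answer": f"Answer built from {len(state['context'])} chunks"}


result = asyncio.run(
    run_pipeline("What is Kubernetes?", [analyze_query, retrieve, rerank, generate])
)
print(result["trace"])  # ['analyze_query', 'retrieve', 'rerank', 'generate']
```

Because every stage has the same signature, a stage can be swapped, skipped per intent, or timed individually without touching the others.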

Stage 1: Query Analysis and Intent Detection

The biggest mistake I see in RAG implementations is treating every user query the same way. A question like “What is Kubernetes?” requires different retrieval than “Show me the pricing page” or “How do I configure SSL certificates?”

I implement a lightweight query classifier before the retrieval stage:

```python
from enum import Enum

from openai import AsyncOpenAI


class QueryIntent(Enum):
    FACTUAL = "factual"  # Needs comprehensive context
    NAVIGATIONAL = "navigational"  # Looking for specific page/doc
    PROCEDURAL = "procedural"  # Step-by-step instructions
    TROUBLESHOOTING = "troubleshooting"  # Error resolution


async def classify_query(query: str, client: AsyncOpenAI) -> QueryIntent:
    """
    Use a fast, cheap LLM to classify query intent.
    This drives retrieval strategy downstream.
    """
    # The async client is required here: the synchronous OpenAI client is not awaitable
    response = await client.chat.completions.create(
        model="gpt-4o-mini",  # Fast and cheap for classification
        messages=[
            {
                "role": "system",
                "content": """Classify the user's query intent into one of these categories:
- factual: General knowledge questions
- navigational: Looking for specific page or document
- procedural: How-to questions requiring steps
- troubleshooting: Error messages or problems to solve
Respond with only the category name.""",
            },
            {"role": "user", "content": query},
        ],
        temperature=0,
        max_tokens=10,
    )
    intent_str = (response.choices[0].message.content or "").strip().lower()
    try:
        return QueryIntent(intent_str)
    except ValueError:
        # Reply was not an exact category name: fall back to the broadest strategy
        return QueryIntent.FACTUAL
```

This 50ms classification step saves me from expensive over-retrieval. For navigational queries, I can skip vector search entirely and use keyword matching. For troubleshooting queries, I prioritize recent documentation and error logs.

Cost Impact: This reduced my average retrieval costs by 40% by avoiding unnecessary vector searches.
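The routing described above can be made explicit as a small lookup from intent to a retrieval plan. This is a minimal sketch. `RetrievalPlan`, `plan_for`, and the `top_k` values are hypothetical. `QueryIntent` is repeated so the block runs on its own.

```python
from dataclasses import dataclass
from enum import Enum


class QueryIntent(Enum):
    FACTUAL = "factual"
    NAVIGATIONAL = "navigational"
    PROCEDURAL = "procedural"
    TROUBLESHOOTING = "troubleshooting"


@dataclass(frozen=True)
class RetrievalPlan:
    use_vector: bool
    use_keyword: bool
    recency_boost: bool  # favor recently updated docs and error logs
    top_k: int


def plan_for(intent: QueryIntent) -> RetrievalPlan:
    """Map a classified intent to a retrieval strategy (illustrative values)."""
    if intent is QueryIntent.NAVIGATIONAL:
        # Looking for a specific page: keyword matching alone, no vector search
        return RetrievalPlan(use_vector=False, use_keyword=True, recency_boost=False, top_k=5)
    if intent is QueryIntent.TROUBLESHOOTING:
        # Errors change with releases, so prefer recent documentation
        return RetrievalPlan(use_vector=True, use_keyword=True, recency_boost=True, top_k=20)
    return RetrievalPlan(use_vector=True, use_keyword=True, recency_boost=False, top_k=20)


print(plan_for(QueryIntent.NAVIGATIONAL).use_vector)  # False
```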

Stage 2: Hybrid Search (The Secret Sauce)

Pure vector search is powerful but has blind spots. It struggles with:

- Exact matches (product codes, error messages, IDs)

- Acronyms and abbreviations

- Recent content (embeddings don’t capture recency)
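Exact matches are where keyword scoring earns its place. A minimal Okapi BM25 scorer shows the effect: a document scores only if it contains the query's exact tokens. An error code like `ERR_CONN_RESET` therefore can't be confused with generic text about connection errors, which an embedding model may place nearby. This is an illustrative sketch. A production keyword index (for example Elasticsearch, OpenSearch, or PostgreSQL full-text search) would fill this role. `k1=1.5` and `b=0.75` are typical values, and the IDF uses the non-negative form Lucene applies.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    # \w+ keeps underscores and digits, so ERR_CONN_RESET stays a single token
    return re.findall(r"\w+", text.lower())


def bm25_scores(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Okapi BM25 score of every document for the query."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n
    df = Counter(term for toks in tokenized for term in set(toks))  # document frequency
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue  # exact-token match or nothing: no partial credit
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


docs = [
    "Fixing ERR_CONN_RESET when the proxy drops idle connections",
    "General guide to network connection errors and timeouts",
    "Configuring SSL certificates for the ingress controller",
]
print(bm25_scores("ERR_CONN_RESET", docs))  # only the first score is nonzero
```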

I implement hybrid search combining three retrieval methods:

```python
import asyncio
from dataclasses import dataclass, replace
from typing import Dict, List

# QueryIntent is the enum defined in Stage 1.


@dataclass
class SearchResult:
    content: str
    score: float
    metadata: Dict  # must carry a unique 'id' used for fusion
    source: str  # 'vector', 'keyword', or 'metadata'


class HybridRetriever:
    def __init__(self, vector_store, keyword_index, metadata_db):
        self.vector_store = vector_store
        self.keyword_index = keyword_index
        self.metadata_db = metadata_db

    async def retrieve(
        self,
        query: str,
        intent: QueryIntent,
        top_k: int = 20,
    ) -> List[SearchResult]:
        """
        Parallel hybrid search with intent-based weighting.
        """
        # Run all three searches concurrently
        vector_results, keyword_results, metadata_results = await asyncio.gather(
            self._vector_search(query, top_k),
            self._keyword_search(query, top_k),
            self._metadata_search(query, top_k),
        )

        # Weight results based on query intent
        weights = self._get_weights_for_intent(intent)

        # Reciprocal Rank Fusion (RRF) for combining results
        combined = self._reciprocal_rank_fusion(
            {
                "vector": vector_results,
                "keyword": keyword_results,
                "metadata": metadata_results,
            },
            weights,
        )
        return combined[:top_k]

    # Backend-specific searches (implementation depends on your storage);
    # each must return results ordered best-first.
    async def _vector_search(self, query: str, top_k: int) -> List[SearchResult]:
        raise NotImplementedError  # e.g. an ANN query against self.vector_store

    async def _keyword_search(self, query: str, top_k: int) -> List[SearchResult]:
        raise NotImplementedError  # e.g. a BM25 query against self.keyword_index

    async def _metadata_search(self, query: str, top_k: int) -> List[SearchResult]:
        raise NotImplementedError  # e.g. a filtered lookup in self.metadata_db

    def _get_weights_for_intent(self, intent: QueryIntent) -> Dict[str, float]:
        """Intent-based weighting for different search methods."""
        weights = {
            QueryIntent.FACTUAL: {"vector": 0.7, "keyword": 0.2, "metadata": 0.1},
            QueryIntent.NAVIGATIONAL: {"vector": 0.2, "keyword": 0.6, "metadata": 0.2},
            QueryIntent.PROCEDURAL: {"vector": 0.6, "keyword": 0.3, "metadata": 0.1},
            QueryIntent.TROUBLESHOOTING: {"vector": 0.5, "keyword": 0.4, "metadata": 0.1},
        }
        return weights[intent]

    def _reciprocal_rank_fusion(
        self,
        ranked_lists: Dict[str, List[SearchResult]],
        weights: Dict[str, float],
        k: int = 60,
    ) -> List[SearchResult]:
        """
        Combine multiple ranked lists using weighted RRF:
        score(d) = Σ_s weight_s / (k + rank_s(d)), with ranks starting at 1.
        """
        scores: Dict[str, float] = {}
        first_seen: Dict[str, SearchResult] = {}
        for source, results in ranked_lists.items():
            for rank, result in enumerate(results, start=1):
                doc_id = result.metadata["id"]
                scores[doc_id] = scores.get(doc_id, 0.0) + weights[source] / (k + rank)
                # Keep one copy per document; keying by id deduplicates across lists
                first_seen.setdefault(doc_id, result)

        # Sort by fused score, highest first, and return copies carrying that score
        ranked_ids = sorted(scores, key=scores.get, reverse=True)
        return [replace(first_seen[doc_id], score=scores[doc_id]) for doc_id in ranked_ids]
```

Performance Impact: Hybrid search improved answer accuracy by 35% over vector-only retrieval, as measured by user satisfaction ratings.
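RRF uses only ranks, never raw scores, so it can merge BM25 scores and cosine similarities that live on different scales. Here is a small worked example of the weighted RRF in `_reciprocal_rank_fusion`, applied to plain document IDs with the factual-intent weights from `_get_weights_for_intent`. The function name `weighted_rrf` and the toy rankings are hypothetical.

```python
from typing import Dict, List, Tuple


def weighted_rrf(
    rankings: Dict[str, List[str]], weights: Dict[str, float], k: int = 60
) -> List[Tuple[str, float]]:
    """score(d) = sum over sources s of weights[s] / (k + rank_s(d)), ranks starting at 1."""
    scores: Dict[str, float] = {}
    for source, doc_ids in rankings.items():
        for rank, doc_id in enumerate(doc_ids, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + weights[source] / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


factual_weights = {"vector": 0.7, "keyword": 0.2, "metadata": 0.1}
rankings = {
    "vector": ["a", "b", "c"],  # best-first doc IDs from each retriever
    "keyword": ["b", "d"],
    "metadata": ["d"],
}
fused = weighted_rrf(rankings, factual_weights)
print([doc for doc, _ in fused])  # ['b', 'a', 'c', 'd']
```

Document `b` is only second in the vector list but first in the keyword list, and that agreement lifts it above `a` (0.7/62 + 0.2/61 ≈ 0.0146 versus 0.7/61 ≈ 0.0115). The constant `k` damps the advantage of a single top rank, so agreement across retrievers wins.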

Stage 3: Reranking (The Most Underrated Step)

Retrieving 20 potentially relevant chunks is good. Selecting the best 5 to send to the LLM is critical. This is where reranking shines.

I use a cross-encoder model for reranking—it’s slower than vector search but much more accurate for relevance scoring:

```python
from typing import List

from sentence_transformers import CrossEncoder


class Reranker:
    def __init__(self):
        # Cross-encoder trained on MS MARCO passage ranking: it reads the query
        # and passage together and outputs a relevance score
        self.model = CrossEncoder("cross-encoder/ms-marco-MiniLM-L-6-v2")

    def rerank(
        self,
        query: str,
        results: List[SearchResult],
        top_k: int = 5,
    ) -> List[SearchResult]:
        """
        Rerank results using cross-encoder for better relevance.
        """
        if not results:
            return []

        # Prepare (query, document) pairs and score them in one batch
        pairs = [(query, result.content) for result in results]
        scores = self.model.predict(pairs)

        # Sort by cross-encoder score, highest first
        scored_results = sorted(zip(results, scores), key=lambda x: x[1], reverse=True)

        # Update scores and return the top k
        reranked = []
        for result, score in scored_results[:top_k]:
            result.score = float(score)
            reranked.append(result)
        return reranked
```

Latency Trade-off: Reranking adds 100-200ms to query time, but it’s worth it. I’ve seen reranking improve answer quality by 25% in A/B tests.

Stage 4: Context Compression and Token Optimization

LLMs have token limits. Even with a 128k-token context window like GPT-4o’s, you don’t want to pay for, or wait on, tokens spent on irrelevant content. I compress the context before sending it to the LLM:

```python
from typing import List

from openai import OpenAI


class ContextCompressor:
    def __init__(self, max_tokens: int = 4000):
        self.max_tokens = max_tokens

    def compress(
        self,
        query: str,
        results: List[SearchResult],
        llm_client: OpenAI,
    ) -> str:
        """
        Compress retrieved context to fit within token budget
        while preserving relevance.
        """
        # Combine all retrieved content, tagging each chunk with its source for citations
        combined_context = "\n\n".join(
            f"[Source: {r.metadata.get('source', 'unknown')}]\n{r.content}"
            for r in results
        )

        # Estimate tokens (rough approximation: 1 token ≈ 4 chars of English text)
        estimated_tokens = len(combined_context) // 4
        if estimated_tokens <= self.max_tokens:
            return combined_context

        # Over budget: use a cheap LLM to compress while preserving key information
        compression_prompt = f"""Given this query: "{query}"

Compress the following context to preserve only information relevant to answering the query.
Remove redundant information, but keep important details and citations.

Context:
{combined_context}

Compressed context:"""

        response = llm_client.chat.completions.create(
            model="gpt-4o-mini",  # Cheap model for compression
            messages=[{"role": "user", "content": compression_prompt}],
            max_tokens=self.max_tokens,  # caps the compressed output at the budget
            temperature=0,
        )
        return response.choices[0].message.content or ""
```

Cost Impact: Context compression reduced my LLM token usage by 60% while maintaining answer quality.
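An LLM compression call adds latency and cost of its own. One cheaper option to try first is extractive packing. This is a swapped-in technique, not part of the compressor above. It keeps the highest-scoring reranked chunks until the same 4-characters-per-token estimate reaches the budget. `Chunk` and `pack_to_budget` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    content: str
    score: float  # reranker score; higher is more relevant


def pack_to_budget(chunks: List[Chunk], max_tokens: int, chars_per_token: int = 4) -> List[Chunk]:
    """Greedily keep the highest-scoring chunks whose estimated tokens fit the budget."""
    packed: List[Chunk] = []
    used = 0
    for chunk in sorted(chunks, key=lambda c: c.score, reverse=True):
        cost = math.ceil(len(chunk.content) / chars_per_token)  # same ~4 chars/token estimate
        if used + cost > max_tokens:
            continue  # too big; a shorter, lower-ranked chunk may still fit
        packed.append(chunk)
        used += cost
    return packed


chunks = [Chunk("x" * 400, 0.9), Chunk("y" * 800, 0.8), Chunk("z" * 200, 0.5)]
print([c.score for c in pack_to_budget(chunks, max_tokens=160)])  # [0.9, 0.5]
```

Unlike LLM compression, packing never rewrites text, so citations stay verbatim. The trade-off is that it drops whole chunks rather than trimming irrelevant sentences inside them.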

Vector Database Selection: The Decision Matrix

I’ve deployed RAG systems on Pinecone, Weaviate, pgvector, ChromaDB, and Qdrant. Here’s my decision matrix based on real production experience:

Pinecone

Best for: Serverless, hands-off scaling

Pros:

- Zero ops overhead

- Excellent performance (p95 latency < 50ms)

- Built-in hybrid search support

- Great documentation

Cons:

- Most expensive option ($70/month minimum for production)

- Vendor lock-in

- Limited control over infrastructure

My use case: Client projects where speed-to-market matters more than cost

pgvector (PostgreSQL extension)

Best for: Existing PostgreSQL users, cost optimization

Pros:

- Leverage existing database infrastructure

- Combine vector search with relational queries

- Cheapest option if you already run PostgreSQL

- Full control over deployment

Cons:

- Requires PostgreSQL expertise

- Slower at scale (p95 latency ~150ms at 1M+ vectors)

- Manual scaling and optimization

My use case: Projects with existing PostgreSQL infrastructure where cost optimization is critical

Weaviate

Best for: Complex filtering, multi-modal search

Pros:

- Built-in hybrid search

- GraphQL API

- Excellent filtering capabilities

- Open source with cloud option

Cons:

- Higher operational complexity

- Steeper learning curve

- Resource-intensive

My use case: Enterprise knowledge bases with complex metadata filtering

Qdrant

Best for: High-performance, self-hosted deployments

Pros:

- Fastest performance I’ve tested (p95 < 30ms)

- Excellent Rust-based architecture

- Great filtering and payload support

- Cost-effective for self-hosting

Cons:

- Smaller community than Pinecone/Weaviate

- Requires Kubernetes/container expertise

My use case: High-throughput RAG systems serving 100k+ queries/day
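Latency numbers like these depend heavily on hardware, index parameters, and data size, so it is worth measuring candidates on your own workload. Here is a minimal harness that reports p50 and p95 latency. It assumes each database client is wrapped in a synchronous `search(query)` callable, a hypothetical adapter you would write per backend.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(search: Callable[[str], object], queries: List[str]) -> Dict[str, float]:
    """Call search() once per query and return p50/p95 latency in milliseconds."""
    latencies_ms: List[float] = []
    for query in queries:
        start = time.perf_counter()
        search(query)
        latencies_ms.append((time.perf_counter() - start) * 1000)
    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 94 is p95
    cuts = statistics.quantiles(latencies_ms, n=100)
    return {"p50_ms": cuts[49], "p95_ms": cuts[94]}
```

Run the same query set against each backend, after a few warm-up calls, so cold caches don't dominate the tail. Use enough queries (hundreds, not dozens) for the p95 estimate to be stable.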

Chunking Strategy: The Underappreciated Art

Bad chunking ruins RAG systems. I’ve learned this the hard way.

Here’s my production chunking strategy:

```python
import re
from typing import Dict, List

from langchain_text_splitters import RecursiveCharacterTextSplitter


class SmartChunker:
    def __init__(
        self,
        chunk_size: int = 800,
        chunk_overlap: int = 200,
        min_chunk_size: int = 100,
    ):
        self.chunk_size = chunk_size
        self.chunk_overlap = chunk_overlap
        self.min_chunk_size = min_chunk_size

        # Semantic separators (ordered by priority)
        self.separators = [
            "\n\n## ",  # Markdown headers
            "\n\n### ",
            "\n\n",  # Paragraph breaks
            "\n",  # Line breaks
            ". ",  # Sentences
            " ",  # Words
            "",  # Characters (last resort)
        ]

    def chunk_document(
        self,
        content: str,
        metadata: Dict,
    ) -> List[Dict]:
        """
        Smart chunking that preserves semantic boundaries.
        """
```

#